Contact centers are adopting AI at record speed, but most leaders still use “LLM” and “generative AI” interchangeably. The distinction matters—especially when accuracy, latency, compliance, and cost-per-call hang in the balance. This guide breaks down LLM vs generative AI in the context of real workflows, showing when each model shines and when you need something different under the hood.

With the landscape set, we can now dive into how these model families differ at a fundamental level.

Difference Between LLM and Generative AI in the Context of Call Center Enhancement

Generative AI is the umbrella category: models that learn patterns from data and generate new output, whether text, audio, images, or structured predictions. LLMs sit inside that umbrella. They specialize in language: understanding conversations, generating and summarizing text, and reasoning over long inputs.

In practice, that makes an LLM one option among several, not the default for every task. Sometimes you need a lightweight model that predicts intent. Sometimes you need an acoustic model tuned to detect silence or tone shifts.

Why LLM vs Generative AI Distinction Matters Now

Modern call centers operate under higher volumes, tighter regulations, and rising expectations for real-time guidance. Against that backdrop, the nuances between LLMs and other generative models decide whether an AI investment becomes a breakthrough or a bottleneck. This section grounds the conversation in today’s environment and explains why architecture choices suddenly matter.

What Changed in Contact Centers

Contact centers are managing more channels, more compliance oversight, and more agents who expect AI support in every interaction. Leaders are looking for automation that reduces handle time, removes repetitive QA work, and brings consistency to coaching. At the same time, infrastructure teams are wrestling with latency budgets, data privacy obligations, and rising API costs.

Because of these pressures, the “AI model choice” is no longer an abstract decision. It directly affects per-call cost, customer experience, regulatory exposure, and agent trust.

Understanding the landscape sets up the core question: which model type, LLM or other generative AI, maps cleanly to which task?

Under-the-Hood Mechanics

LLMs use transformer architectures trained on massive text corpora, enabling them to reason across long sequences and generate natural language. Other generative models may focus on acoustic features, structured signals, or smaller domain datasets. They often trade reasoning power for speed, determinism, or cost efficiency.

For contact centers, this difference becomes critical: one model interprets conversation context, another identifies dead air, another scores compliance risk.

Once you understand the mechanics, you can match model design to workflow needs instead of using one model everywhere.

Performance Tradeoffs

LLMs excel at reasoning but bring higher latency, a risk of hallucination, and per-token costs. Smaller generative models offer predictability and lower cost but lack deep contextual understanding. Leaders must weigh accuracy tolerance, compliance exposure, and call volume.

In contact centers where every second and every inference cost counts, these tradeoffs define your architecture.

Performance isn’t just theory; it's what determines whether AI actually scales across millions of calls.
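
To make the cost side of this tradeoff concrete, here is a minimal back-of-the-envelope sketch in Python. The `ModelCostProfile` class, the token counts, and the per-1,000-token prices are hypothetical placeholders, not published rates for any vendor; substitute your own contract pricing and measured token usage.

```python
from dataclasses import dataclass


@dataclass
class ModelCostProfile:
    """Hypothetical cost profile for one model option; all numbers are placeholders."""
    name: str
    tokens_per_call: int        # assumed average tokens processed per call
    price_per_1k_tokens: float  # assumed price in USD per 1,000 tokens


def cost_per_call(profile: ModelCostProfile) -> float:
    """Inference cost for a single call: tokens x (price per 1,000 tokens / 1,000)."""
    return profile.tokens_per_call / 1000 * profile.price_per_1k_tokens


def monthly_cost(profile: ModelCostProfile, calls_per_month: int) -> float:
    """Scale the per-call cost to a monthly call volume."""
    return cost_per_call(profile) * calls_per_month


# Placeholder profiles: a large LLM vs. a lightweight classifier on the same transcript.
llm = ModelCostProfile("large_llm", tokens_per_call=3000, price_per_1k_tokens=0.002)
small = ModelCostProfile("light_classifier", tokens_per_call=3000, price_per_1k_tokens=0.0001)

for profile in (llm, small):
    print(f"{profile.name}: ${monthly_cost(profile, 1_000_000):,.2f} per month")
```

The specific figures matter less than the shape: cost grows linearly with both call volume and tokens per call, so moving even a share of calls off the LLM path changes the monthly total proportionally.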

How LLMs and Other Generative Models Fit Contact-Center Workflows

This section brings together the technical comparison and practical “where to use what” guidance. It examines LLM vs generative AI in real contact-center scenarios: reasoning vs recognition tasks, cost vs accuracy, and where hybrid approaches shine.

Where LLMs Excel in Contact Centers

LLMs thrive when tasks require meaning, nuance, or context stitching. They are ideal for:

- Real-time agent assist (knowledge retrieval, objection handling).

- Auto-QA for policy detection and sentiment interpretation.

- Conversation summarization and coaching insight extraction.

- Natural-language search across call logs and dispositions.

These tasks depend on understanding, not just classification, which plays to LLM strengths.

Use LLMs when the workflow mirrors human reasoning or depends on language nuance.

Where Other Generative Models Outperform LLMs

Many contact-center tasks are high-volume, pattern-driven, and require minimal reasoning, making them better suited to smaller generative models. These include:

- Acoustic analysis (silence detection, talk ratio, tone).

- High-frequency intent tagging.

- Compliance scoring using deterministic rules + generative classifiers.

- Speech-to-text (STT) pipelines built on dedicated speech models rather than LLMs.

These models are faster, cheaper, and more precise for structured tasks. For repetitive or audio-driven workflows, leaner models deliver superior ROI and reliability.
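
As an illustration of how lightweight these acoustic tasks can be, the sketch below flags dead air and computes talk ratio from per-frame energy. It deliberately swaps in a simple energy-threshold heuristic in place of a trained acoustic model, and it assumes audio has already been decoded into float samples per channel. The function names, threshold, and minimum-duration values are hypothetical and would need tuning on real call audio.

```python
import math
from typing import List, Optional, Sequence, Tuple


def frame_rms(samples: Sequence[float], frame_size: int) -> List[float]:
    """Split a mono signal into non-overlapping frames and return each frame's RMS energy.

    Trailing samples that do not fill a whole frame are dropped.
    """
    energies = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        energies.append(math.sqrt(sum(x * x for x in frame) / frame_size))
    return energies


def dead_air_segments(energies: Sequence[float], threshold: float,
                      min_frames: int) -> List[Tuple[int, int]]:
    """Return (start, end) frame ranges, end exclusive, where energy stays below
    threshold for at least min_frames consecutive frames."""
    segments: List[Tuple[int, int]] = []
    run_start: Optional[int] = None
    for i, energy in enumerate(energies):
        if energy < threshold:
            if run_start is None:
                run_start = i  # a quiet run begins
        else:
            if run_start is not None and i - run_start >= min_frames:
                segments.append((run_start, i))
            run_start = None  # speech resumes; reset the run
    # Close a quiet run that lasts until the end of the audio.
    if run_start is not None and len(energies) - run_start >= min_frames:
        segments.append((run_start, len(energies)))
    return segments


def talk_ratio(agent_energies: Sequence[float], customer_energies: Sequence[float],
               threshold: float) -> float:
    """Share of speech frames that belong to the agent (0.0 if nobody speaks)."""
    agent_speech = sum(1 for e in agent_energies if e >= threshold)
    customer_speech = sum(1 for e in customer_energies if e >= threshold)
    total = agent_speech + customer_speech
    return agent_speech / total if total else 0.0


# Hypothetical per-frame energies for one channel; frames 1-3 are quiet.
energies = [0.5, 0.01, 0.01, 0.01, 0.5, 0.02, 0.6]
print(dead_air_segments(energies, threshold=0.05, min_frames=2))  # [(1, 4)]
```

Frame 5 is also quiet but is not flagged, because a single frame falls short of the minimum duration. This kind of determinism (same audio, same answer, in microseconds) is exactly what the text means by predictability for structured tasks.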

How the Two Approaches Complement Each Other

LLMs provide depth; smaller generative models provide speed and stability. LLMs interpret; classifiers predict. LLMs read; acoustic models listen. The smartest stacks orchestrate both, running the right model at the right time.

In contact centers, this balanced approach translates into lower cost, higher accuracy, and reduced hallucination risk. Hybrid architectures win because they align capabilities with workload demands, not hype.
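
One common way to orchestrate both is a confidence-gated router: a cheap classifier labels every utterance, and only utterances that need reasoning, or that the classifier is unsure about, are escalated to an LLM. The sketch below assumes a hypothetical `keyword_classifier` standing in for a real intent model; the intent names and the 0.8 confidence cutoff are illustrative choices, not recommendations.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

# Illustrative intents assumed to need multi-turn reasoning rather than a canned flow.
REASONING_INTENTS = {"refund_dispute", "complaint", "policy_question"}


@dataclass
class RoutingDecision:
    handler: str       # "fast_model" or "llm"
    intent: str
    confidence: float


def route(text: str,
          classify: Callable[[str], Tuple[str, float]],
          min_confidence: float = 0.8) -> RoutingDecision:
    """Send an utterance to the LLM only when the intent needs reasoning
    or the lightweight classifier is not confident enough."""
    intent, confidence = classify(text)
    if intent in REASONING_INTENTS or confidence < min_confidence:
        return RoutingDecision("llm", intent, confidence)
    return RoutingDecision("fast_model", intent, confidence)


def keyword_classifier(text: str) -> Tuple[str, float]:
    """Hypothetical stand-in for a trained intent model: keyword match plus a fixed score."""
    lowered = text.lower()
    if "balance" in lowered:
        return "balance_check", 0.95
    if "refund" in lowered:
        return "refund_dispute", 0.9
    return "unknown", 0.3


for utterance in ("What's my account balance?", "I was charged twice and want a refund", "Hi there"):
    decision = route(utterance, keyword_classifier)
    print(f"{utterance!r} -> {decision.handler} ({decision.intent}, {decision.confidence})")
```

The gate keeps formulaic, high-volume traffic on the cheaper path while sending ambiguous or high-stakes turns to the model that can reason about them; the confidence threshold becomes the main knob for trading cost against coverage.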

In contact centers, LLMs excel when workflows demand reasoning, natural language understanding, and contextual stitching, while other generative models dominate high-volume, low-latency, pattern-driven tasks. The result is clear: modern CX teams achieve the best efficiency, accuracy, and cost control when they deploy hybrid AI, letting each model class do what it does best.

Through these applications, generative AI and LLMs significantly enhance the efficiency, personalization, and overall quality of customer service in call centers across various industries.

How Do You Implement Generative AI and LLMs Successfully in Contact Centers?

Implementing AI in contact centers isn’t just about choosing a powerful model; it’s about matching the right model to the right workflow. LLMs bring reasoning and natural-language depth, while other generative models deliver speed, structure, and low-latency performance. A successful rollout blends both intentionally.

1. Identify Objectives (LLM vs Generative AI Fit)

Start with goals that clarify whether you need reasoning (LLMs) or recognition/prediction (other generative models).

When LLMs fit best: Reducing AHT through contextual summarization, improving CSAT with personalized agent suggestions, or increasing resolution rates via deep conversational understanding.

- Example: In healthcare, LLMs can help reduce patient wait times by delivering accurate, context-aware answers to symptom-based questions.

When other generative models are better: Handling high-volume repetitive tasks such as routing, intent detection, or simple transactional queries.

- Example: In fintech, operational queries like balance checks or status updates are faster and cheaper with lightweight generative classifiers.

Why this distinction matters: Objectives anchored in interpretation call for LLMs; objectives rooted in volume efficiency call for smaller generative models.

2. Data Preparation (Optimizing for Both Model Types)

Quality data determines whether each model performs reliably.

LLM needs: Rich conversational transcripts, diverse sentiment patterns, and detailed context markers so the model learns to reason and infer.

- Example: Software companies feed LLMs varied troubleshooting conversations to help the model generate accurate, step-by-step support.

Generative model needs: Clean, structured historical data such as intent tags, acoustic markers, and compliance flags, so the model can make fast, high-confidence predictions.

- Example: In call centers, preparing labeled silence segments or escalation patterns strengthens acoustic or classification models.

Why this distinction matters: LLMs learn contextual nuance; smaller models learn repeatable patterns.

3. Choose the Right Model (The Critical LLM vs Generative AI Decision)

Choose LLMs when:

The task requires understanding long conversations, making sense of ambiguity, generating human-like dialogue, or stitching multiple data points into a coherent response.

- Example: In healthcare, an LLM is far better placed than a simple classifier to understand a patient’s narrative and provide safe, contextual guidance.

Choose other generative models when:

The task is structured, formulaic, or acoustic, where speed, determinism, and low cost matter more than language complexity.

- Example: In fintech, high-volume transactional queries or fraud pattern detection run better on lightweight generative models than on LLMs.

Why this distinction matters: LLMs specialize in language reasoning; generative models specialize in task efficiency and pattern reliability.

4. Integration (Human + AI, with the Right Model at the Right Layer)

Integrate AI so it augments human agents rather than replacing them.

How LLMs integrate: Layer them into real-time agent assist, knowledge retrieval, dynamic scripting, and personalized coaching workflows.

- Example: In edtech, LLMs offer contextual suggestions to tutors based on how a student phrases their confusion.

How other models integrate: Embed them into routing engines, compliance scoring, acoustic analysis, and fast-response systems that require sub-second performance.

- Example: In call centers, generative models flag dead air, detect escalation cues, or classify call intent before an LLM ever processes the text.

Why this distinction matters: LLMs enhance cognitive tasks; generative models enhance operational throughput.
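
For the compliance-scoring layer, the deterministic-rules half can be as simple as pattern checks over a transcript. The sketch below covers only that half (no generative classifier is included), and the `ComplianceRule` structure, rule names, and phrases are hypothetical examples; real required disclosures depend on your regulator, product, and scripts.

```python
import re
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class ComplianceRule:
    name: str
    pattern: re.Pattern
    must_appear: bool  # True: phrase is required; False: phrase is prohibited


# Hypothetical rules for illustration only.
RULES = [
    ComplianceRule("recording_disclosure",
                   re.compile(r"\bcall (is|may be) recorded\b", re.IGNORECASE), True),
    ComplianceRule("identity_verification",
                   re.compile(r"\bverify (your )?(identity|date of birth)\b", re.IGNORECASE), True),
    ComplianceRule("no_guaranteed_returns",
                   re.compile(r"\bguaranteed returns?\b", re.IGNORECASE), False),
]


def score_transcript(transcript: str,
                     rules: Sequence[ComplianceRule]) -> Tuple[float, List[str]]:
    """Return (share of rules passed, names of failed rules)."""
    failures = []
    for rule in rules:
        found = rule.pattern.search(transcript) is not None
        if found != rule.must_appear:
            failures.append(rule.name)
    score = 1.0 - len(failures) / len(rules) if rules else 1.0
    return score, failures


print(score_transcript("This call may be recorded. Can I verify your identity?", RULES))  # (1.0, [])
```

Rules like these run in sub-millisecond time and give auditable pass/fail reasons; a classifier or LLM can then be reserved for the judgment calls that fixed patterns cannot capture.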

5. Training and Testing (Different Approaches for LLMs vs Generative Models)

Training LLMs: Use conversational datasets, diverse agent-customer patterns, and edge-case scenarios. This trains the model to handle nuance and variability.

- Example: Customer service teams simulate complex interactions, such as refund disputes and layered complaints, to sharpen LLM reasoning.

Training generative models: Use clear, structured, labeled examples at high volume to teach precise classification or acoustic detection.

- Example: In software support, thousands of repeated troubleshooting steps help smaller models classify issues instantly.

Why this distinction matters: LLM evaluation focuses on quality and reasoning; generative model evaluation focuses on precision and consistency.

6. Monitoring and Updating (Model-Specific Feedback Loops)

Monitoring LLMs: Watch for hallucinations, context drift, compliance misinterpretation, and prompt performance. Update prompts or fine-tune as regulations or scripts evolve.

- Example: Healthcare contact centers update LLMs regularly to recognize new medical terminology.

Monitoring generative models: Track accuracy, false positives, and latency. Retrain frequently as patterns shift.

- Example: Silence detection or escalation models must be recalibrated as call styles and agent behaviors evolve.

Why this distinction matters: LLMs require reasoning safety checks; generative models require performance recalibration.
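
On the generative-model side, a minimal sketch of the tracking step might compare model flags against human QA labels and check latency against a budget. The function names, the recalibration thresholds (0.9 precision, 300 ms p95 latency), and the sample data are hypothetical placeholders; the percentile uses the simple nearest-rank method.

```python
import math
from typing import Dict, Sequence


def detection_metrics(predicted: Sequence[bool], actual: Sequence[bool]) -> Dict[str, float]:
    """Precision, recall, and false-positive rate of model flags vs. human QA labels."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    pairs = list(zip(predicted, actual))
    tp = sum(1 for p, a in pairs if p and a)
    fp = sum(1 for p, a in pairs if p and not a)
    fn = sum(1 for p, a in pairs if not p and a)
    tn = sum(1 for p, a in pairs if not p and not a)
    return {
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
    }


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile, e.g. pct=95 for p95 latency."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def needs_recalibration(metrics: Dict[str, float], p95_latency_ms: float,
                        min_precision: float = 0.9,
                        max_p95_latency_ms: float = 300.0) -> bool:
    """Flag the model for retraining when precision drops or latency exceeds budget."""
    return metrics["precision"] < min_precision or p95_latency_ms > max_p95_latency_ms


# Hypothetical batch of silence-detection flags vs. QA reviewer labels.
flags = [True, True, False, False, True]
labels = [True, False, False, True, True]
latencies_ms = [120.0, 180.0, 210.0, 450.0]

metrics = detection_metrics(flags, labels)
p95 = percentile(latencies_ms, 95)
print(metrics, p95, needs_recalibration(metrics, p95))
```

Running these checks on a rolling window of recent calls turns "retrain frequently as patterns shift" into a concrete trigger rather than a calendar habit.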

Examples and Use Cases

- Software Industry: Implementing generative AI for automated code generation, bug detection, and resolution suggestions can enhance developer productivity. LLMs can be used to improve documentation and support chatbots.

- Healthcare: AI can analyze patient inquiries to provide instant, accurate information, reducing the burden on healthcare professionals and improving patient experience.

- EdTech: AI can generate personalized learning content or provide real-time student assistance, enhancing the learning experience.

- Customer Service: Across industries, AI can handle routine inquiries, allowing human agents to focus on more complex issues, thereby improving overall efficiency and customer satisfaction.

- FinTech: AI can assist in interpreting financial queries, providing investment advice, detecting fraudulent activities, and enhancing security and customer trust.

By focusing on these steps and adapting the approach to specific industry needs, call centers can leverage the power of Generative AI and LLMs to significantly enhance their efficiency, customer satisfaction, and operational outcomes.

How Does Convin Harness Generative AI and LLMs for Superior Customer Support?

Convin uses both Generative AI and LLMs across its product suite to enhance customer service in several ways.

Here's an in-depth look at how these technologies are integrated and the benefits they bring to customer interactions:

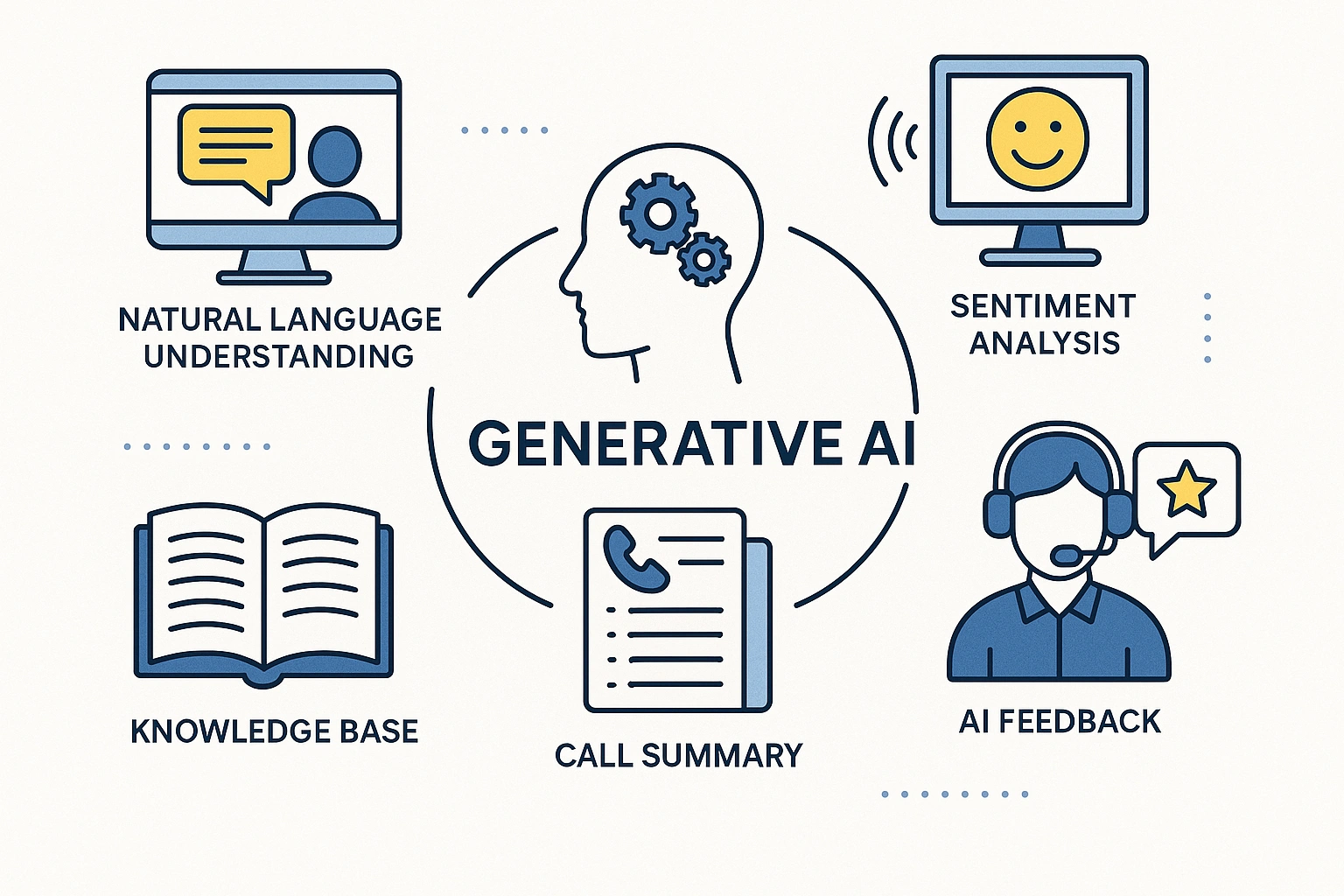

1. Natural Language Understanding (NLU)

Convin uses Generative AI to power its NLU capabilities, enabling the system to comprehend customer queries and interactions in a human-like manner. This understanding allows for more accurate responses and better customer service, as the system can interpret the intent and context of customer communications.

2. Sentiment Analysis

Through Generative AI, Convin's sentiment analysis tool can interpret and analyze the emotional tone behind customer conversations. This insight helps agents understand the customer's mood and tailor their responses, improving engagement, satisfaction, and the overall customer experience.

3. Knowledge Base

Convin's knowledge base is enriched with Generative AI, which helps automatically update and expand the repository of information. This ensures that customer service agents have access to the most relevant and up-to-date information, enabling them to provide quick and accurate responses to customer inquiries.

4. Call Summary

The platform utilizes Generative AI to generate concise and informative summaries of customer calls. This feature helps agents and managers quickly grasp the key points of each interaction, facilitating better follow-up, training, and quality assurance processes.

5. AI Feedback

AI Feedback in Convin leverages Generative AI to provide real-time suggestions and insights to agents during or after customer interactions. This feedback can include tips on improving communication skills, technical knowledge, or customer engagement strategies, thereby directly contributing to enhancing the quality of customer service.

By combining the predictive power of Generative AI with the interpretative capabilities of LLMs, Convin offers a holistic approach to improving customer service. This dual integration ensures that every customer interaction is informed, efficient, and tailored to the individual’s needs, significantly boosting customer satisfaction and loyalty.

In essence, Convin’s integration of Generative AI and LLMs is a game-changer in the realm of customer service, offering a suite of tools that not only enhance the efficiency and effectiveness of agents but also elevate the overall customer experience.

Schedule your Convin demo today!

FAQs

1. Are LLM and generative AI the same?

No, LLMs are a subset of generative AI focusing specifically on generating human-like text, while generative AI encompasses a broader range of content creation capabilities across various media.

2. What is the difference between GPT and LLM?

GPT (Generative Pretrained Transformer) is a type of LLM developed by OpenAI, designed to generate text. LLM (Large Language Model) refers to any large-scale model capable of understanding and generating human language.

3. What is the difference between LLM and traditional AI?

Traditional AI often relies on rule-based or narrowly task-specific processes. In contrast, LLMs learn from vast amounts of data to understand and generate human-like text, which makes them more flexible and broadly applicable.

4. What is the difference between LLM and AI agent?

An AI agent is a system capable of autonomous actions in an environment to achieve designated goals, while an LLM is specifically designed to understand and generate human language.

5. Is a chatbot an LLM?

Not exactly. A chatbot is an application, while an LLM is a model that can power it: a chatbot built on a large language model can understand and generate human-like responses. Not all chatbots use LLMs, however; some operate on simpler, rule-based systems.

6. What does LLM mean in artificial intelligence?

In artificial intelligence, LLM stands for Large Language Model, a type of deep learning model trained on vast datasets to understand, interpret, and generate human language.
