In the ever-evolving contact center industry, automation is taking center stage in quality assurance (QA) processes. AI-powered auditing tools promise speed, scale, and objectivity, but what happens when these systems become biased?

Contact center leaders now face a critical challenge: ensuring fairness in AI-driven evaluations without compromising performance or compliance.

Bias in AI refers to systematic inaccuracies in machine learning systems that result in unfair treatment during decision-making. In call audits, this manifests as inconsistent scoring, unfair coaching recommendations, and misclassified agent behavior, all of which stem from skewed training data or flawed algorithmic logic.

This blog will help you identify, detect, and eliminate bias in AI from your call audit workflows. You’ll discover real-world examples, learn actionable strategies, and explore how Convin ensures fairness in automated QA. If you care about ethical AI and consistent performance, keep reading.

Track audit performance using role-based reporting.

Defining Bias in AI and Its Importance in Call Audits

AI is revolutionizing contact center operations, especially in quality assurance (QA). But bias in AI remains a hidden threat to the integrity of call audits. If undetected, it skews agent evaluations, training, and business decisions.

Bias in AI occurs when the system treats different inputs unfairly due to flawed data or logic. This can be unintentional, emerging from biased training datasets or inadequate representation. In contact centers, this bias influences how AI systems audit calls, impacting both fairness and accuracy.

Fairness in AI Begins with Fair QA

Fairness in AI ensures that every agent and customer is treated equally during evaluations and assessments. QA audits should not favor certain accents, languages, or speech patterns over others. Yet many automated systems unknowingly apply skewed standards that impact agent scores.

- Fairness in AI ensures impartial scoring across voice, chat, and email interactions.

- It prevents penalization of agents due to dialects or regional communication styles.

- It helps meet compliance and ethical QA standards, reducing regulatory risk.

Organizations must champion fairness in AI to maintain trust in automated evaluations. Biased QA not only affects agent performance reviews but also impacts coaching strategies. As a result, fairness in machine learning becomes essential for ethical contact centers.

AI Bias Examples from Real Call Audit Scenarios

Let’s examine common AI bias examples in call auditing environments. These examples help identify how deeply embedded and often overlooked these biases are. Without rigorous detection, they may persist across thousands of interactions.

- Accent bias: AI may misinterpret or undervalue agents with regional or international accents.

- Sentiment misclassification: Emotion-detection tools might flag assertive tones as hostile or angry.

- Gender/racial bias: Training models on non-diverse datasets may skew results toward specific demographics.

- Script adherence bias: AI might overvalue scripted responses and penalize natural conversations.

Each of these AI bias examples highlights the importance of detecting bias in AI. Auditing AI tools helps uncover these systemic errors and adjust training accordingly. Fairness ethics require that systems evolve based on real, diverse data inputs.
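Accent bias often starts in the transcript, before any scoring happens. One way to check for it is to compare word error rate (WER) across agent groups on calls that already have a human-verified transcript. The sketch below is a minimal illustration, not Convin's method: the group labels, sample sentences, and the `wer_by_group` helper are all hypothetical.

```python
from collections import defaultdict

def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref, hyp = reference.lower().split(), hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")
    # Classic dynamic-programming edit distance, computed one row at a time.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # reference word dropped
                            curr[j - 1] + 1,      # extra word inserted
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1] / len(ref)

def wer_by_group(samples):
    """samples: iterable of (group, human_transcript, machine_transcript)."""
    per_group = defaultdict(list)
    for group, reference, hypothesis in samples:
        per_group[group].append(word_error_rate(reference, hypothesis))
    return {group: sum(v) / len(v) for group, v in per_group.items()}

# Hypothetical sample: the same sentence spoken by agents in two accent groups.
samples = [
    ("accent_a", "thank you for calling how can i help",
                 "thank you for calling how can i help"),
    ("accent_b", "thank you for calling how can i help",
                 "thank you for falling how can help"),
]
rates = wer_by_group(samples)
print(rates)
```

If one group's error rate is consistently higher, any score built on those transcripts inherits the gap, so the transcription layer is worth fixing before the scoring rules are tuned.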

Remove subjectivity from QA with Convin’s ML models.

How Bias in AI Affects Automated QA Processes

Bias in AI has profound effects on contact center operations, beyond just agent evaluations. From damaging morale to flawed training outcomes, its impact can be deeply organizational. QA automation, while efficient, must be constantly monitored for these blind spots.

Automated QA systems analyze interactions at scale, often thousands of calls a day. If bias goes unchecked, it can skew results consistently across channels and agents. This undermines trust in AI systems, coaching outcomes, and compliance efforts.

Learn how Convin drives fairness in contact centers.

Bias in Automated QA: Real Threats to Contact Center Integrity

Bias in automated QA disrupts the core objective of quality assurance: fairness and accuracy. If AI incorrectly flags specific agents, it erodes internal trust and performance motivation. This affects not only individual agents but also team-wide KPIs and client deliverables.

- Biased scoring leads to inconsistent feedback, hindering performance improvement.

- Inaccurate QA reports may lead to incorrect coaching assignments or disciplinary actions.

- AI audits may underreport real performance threats due to narrow audit parameters.

Unchecked bias in AI breeds frustration among both agents and QA managers. Agents feel unheard, and managers struggle with ineffective coaching sessions. To prevent this, automated QA tools must incorporate built-in bias detection into their AI mechanisms.

Fairness in Machine Learning for Consistent Call Evaluations

Fairness in machine learning ensures algorithmic consistency across all evaluations. Machine learning (ML) models learn from historical data; if that data is biased, so are the predictions. Therefore, fairness in AI must be baked into both training and deployment stages.

- Ensure diverse, unbiased datasets when training models for call analysis.

- Conduct regular audits to verify that demographic or performance factors do not skew evaluation outputs.

- Use explainable AI tools to understand why certain decisions were made.
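A regular audit of this kind can be as simple as comparing pass rates between agent groups. The sketch below assumes a hypothetical pass mark of 80 and borrows the "four-fifths" rule of thumb from employment-selection analysis, where a group whose rate falls below 80% of the best group's rate deserves a closer look. Both thresholds are assumptions to adjust, and the group names and scores are made up.

```python
from collections import defaultdict

def pass_rates(audits, pass_mark=80):
    """audits: iterable of (group, score). Returns the share of passing audits per group."""
    counts = defaultdict(lambda: [0, 0])  # group -> [passed, total]
    for group, score in audits:
        counts[group][0] += score >= pass_mark
        counts[group][1] += 1
    return {group: passed / total for group, (passed, total) in counts.items()}

def impact_ratios(rates):
    """Each group's pass rate relative to the best-performing group's rate."""
    best = max(rates.values())
    if best == 0:
        return {group: 1.0 for group in rates}  # nobody passed, so no relative gap
    return {group: rate / best for group, rate in rates.items()}

# Hypothetical audit scores tagged with an agent group.
audits = [
    ("region_a", 85), ("region_a", 90), ("region_a", 78), ("region_a", 88),
    ("region_b", 82), ("region_b", 70), ("region_b", 75), ("region_b", 79),
]
rates = pass_rates(audits)
ratios = impact_ratios(rates)
flagged = [group for group, ratio in ratios.items() if ratio < 0.8]
print(rates, ratios, flagged)
```

A flagged group is a prompt for investigation rather than proof of bias: the gap may reflect real performance differences, small samples, or a skewed model, and only a closer review can tell which.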

Call centers rely on data-driven insights to coach, train, and reward agents. Bias in AI disrupts this loop, making outcomes unreliable and inconsistent. Fairness in machine learning enables every agent to compete on a level field.

Audit faster using Convin’s speech-to-text transcription


Bias Detection in AI: Tools, Techniques, and Strategies

Detecting bias in AI requires a combination of automated and human oversight. Relying solely on black-box models can lead to unchecked discrimination in QA processes. Managers must use tools that provide transparency, traceability, and flexibility.

Bias detection in AI is an ongoing process, not a one-time audit. It involves testing AI models with various inputs and analyzing outputs for inconsistencies. Techniques such as fairness dashboards, human-in-the-loop reviews, and model retraining are key.
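Testing with varied inputs can be framed as a paired check: take two transcripts that a human reviewer considers equivalent, score both, and look at the gap. In the sketch below, `toy_score` is a deliberately naive, hypothetical stand-in for a real scoring model (it only rewards exact script phrases), and the transcripts are invented for illustration.

```python
from statistics import mean

# Hypothetical script phrases the toy scorer looks for.
REQUIRED_PHRASES = ("thank you for calling", "is there anything else")

def toy_score(transcript: str) -> int:
    """Stand-in for a real model: 50 points per exact script phrase found."""
    text = transcript.lower()
    return sum(50 for phrase in REQUIRED_PHRASES if phrase in text)

def paired_gaps(pairs, score_fn):
    """pairs: (baseline, variant) transcripts judged equivalent by a human."""
    return [score_fn(baseline) - score_fn(variant) for baseline, variant in pairs]

pairs = [
    # Same intent, different phrasing: the variant paraphrases the script.
    ("Thank you for calling. Is there anything else I can do?",
     "Thanks for ringing us. Anything more I can help with?"),
    # Only the non-scripted part of the sentence changes.
    ("Thank you for calling, your refund is processed.",
     "Thank you for calling, your refund has gone through."),
]
gaps = paired_gaps(pairs, toy_score)
mean_gap = mean(gaps)
print(gaps, mean_gap)
```

A gap near zero suggests the scorer responds to meaning. A large, consistent gap on paraphrased pairs points to script adherence bias, and the same pattern works for accent or dialect variants of one call.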

Auditing AI Tools for Bias Detection

Auditing AI tools is the first step toward creating responsible automation systems. Contact centers should assess tools for bias using both technical and operational audits. Convin provides built-in transparency that supports this continuous auditing cycle.

- Run simulated conversations across demographics to test audit consistency.

- Use performance breakdowns to check if any agent group is scoring unusually low.

- Periodically integrate human audit checks to review the AI scoring logic.
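The performance breakdown in the second point can be sketched in a few lines. The example below flags any group whose average score trails the overall average by more than a chosen margin, and skips groups with too few audits to judge. The margin of 5 points, the minimum of 3 audits, and the team names are all assumptions for illustration.

```python
from collections import defaultdict
from statistics import mean

def low_scoring_groups(records, max_gap=5.0, min_audits=3):
    """records: iterable of (group, score). Flags groups trailing the overall mean."""
    by_group = defaultdict(list)
    for group, score in records:
        by_group[group].append(score)
    overall = mean(score for scores in by_group.values() for score in scores)
    report = {}
    for group, scores in by_group.items():
        gap = overall - mean(scores)
        report[group] = {
            "audits": len(scores),
            "mean": mean(scores),
            # Small samples are reported but never flagged.
            "flagged": len(scores) >= min_audits and gap > max_gap,
        }
    return report

# Hypothetical audit scores per team.
records = [
    ("team_north", 90), ("team_north", 92), ("team_north", 94),
    ("team_south", 70), ("team_south", 72), ("team_south", 74),
    ("team_east", 60),
]
report = low_scoring_groups(records)
for group, row in report.items():
    print(group, row)
```

Flagged groups are good candidates for the human audit check in the third point, since a reviewer can tell whether the low scores reflect the calls or the scoring logic.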

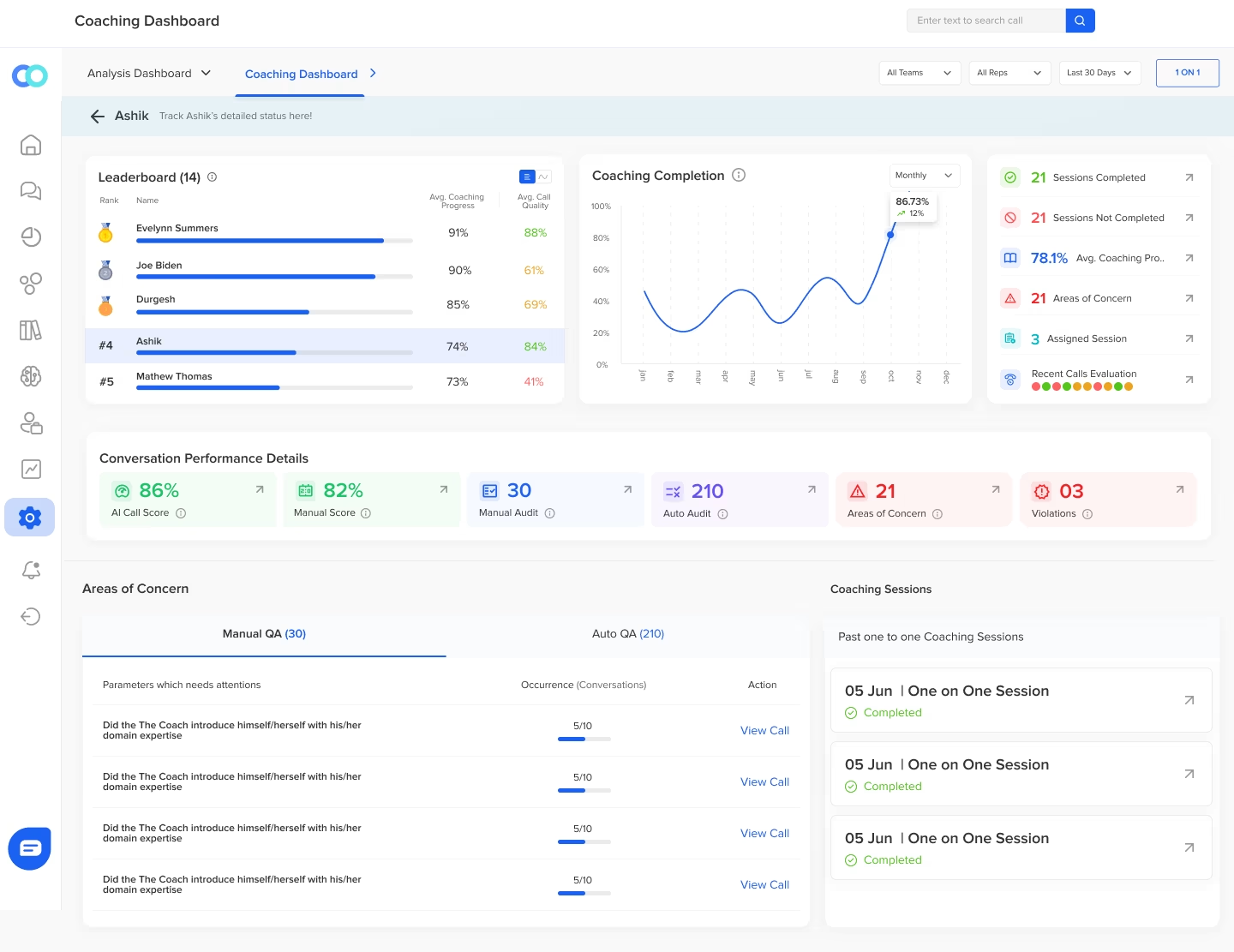

Convin supports automated quality management with both real-time and manual audit options. These tools help QA managers cross-validate AI performance without disrupting workflows.

Ethics and Fairness in AI-Driven Contact Centers

Ethical AI frameworks ensure that QA systems respect human dignity and operational fairness. Fairness ethics involves maintaining transparency, avoiding discrimination, and aligning with established values. In AI-powered contact centers, this is essential for building trust and long-term credibility.

- Establish clear AI audit policies and make them readily accessible to all stakeholders.

- Involve diverse teams in developing scoring templates and rules.

- Provide explainable feedback to agents so they understand AI-generated evaluations.

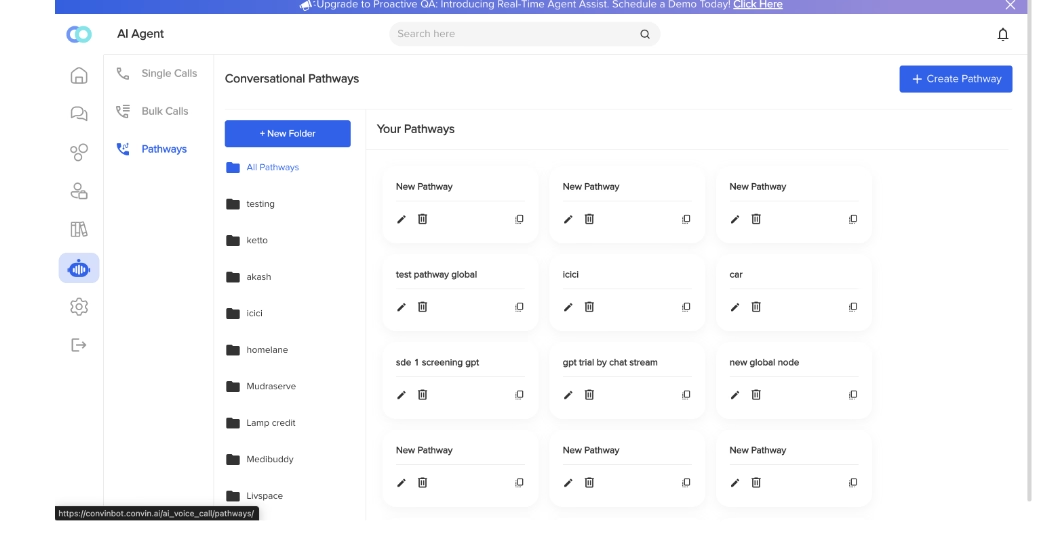

Convin promotes ethics in AI by enabling customizable QA scorecards. This allows businesses to align automation with their core values and compliance needs.

Spot audit flaws using Convin’s conversation insights.

How Convin Helps Eliminate Bias in AI Call Audits

Convin is uniquely built to identify and reduce bias in AI across every touchpoint. Unlike generic auditing platforms, Convin emphasizes fairness in machine learning from the start. It supports QA teams with detailed reporting, agent coaching, and human validation tools.

Convin’s Features Supporting Fairness in Machine Learning

Convin ensures bias detection in AI is a continuous, real-time process. From real-time analysis to post-call insights, every feature is built to support fair outcomes. Its ML models are regularly tested for bias against diverse contact center scenarios.

- Custom Scorecards align QA audits with specific business and compliance needs.

- In-house speech-to-text models provide high accuracy, minimizing language-related misinterpretations.

- Real-Time Agent Assist provides in-call feedback that helps prevent errors early.

- Automated Coaching ensures agents receive unbiased, performance-specific learning paths.

Unlike other tools, Convin also supports manual audit layers. This hybrid approach keeps AI-driven outcomes verifiable, so QA teams can confirm that scores are fair rather than take them on trust.

Read more on how Convin automates agent coaching with precise ethics.

Real Use Cases and AI Bias Examples Solved with Convin

Convin’s technology has helped many industries tackle bias in AI and drive impactful outcomes. Here are some real-world examples where Convin eliminated bias in automated QA:

- Fintech Client: Achieved a 21% sales boost through fairer coaching driven by unbiased audits.

- Healthcare Provider: Reported a 27% increase in CSAT after implementing bias-aware QA.

- E-commerce Giant: Reduced average handle time by 56 seconds with consistent scoring and coaching.

- Insurance Firm: Achieved a 60% reduction in ramp-up time using AI-powered coaching tailored to fair evaluations.

These case studies show the importance of bias detection in AI across customer-facing roles. Contact center leaders benefit from consistent performance metrics and improved agent satisfaction.

Benchmark agents fairly with Convin’s behavioral analytics.

Build Trust by Tackling Bias in AI Head-On

Bias in AI is a risk every contact center must manage as AI adoption grows. It undermines your QA audits, coaching, agent morale, and overall customer experience. However, with proactive detection, fairness in AI can become a strength, rather than a liability.

Embrace Bias Detection in AI for Ethical Contact Center Management

Bias detection in AI should be an integral part of your contact center’s digital strategy. It ensures every decision is transparent, equitable, and compliant. Adopting tools like Convin enables this transformation with ease.

- Use explainable AI tools to ensure fairness in automated QA.

- Monitor real-time QA results for inconsistencies across demographics.

- Involve human reviewers for periodic fairness checks in agent evaluations.
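A periodic fairness check with human reviewers can be made measurable by double-scoring a sample of calls and comparing the two sets of scores per group. The sketch below computes the average difference between AI and human scores. The 5-point tolerance, the group labels, and the scores are hypothetical.

```python
from collections import defaultdict
from statistics import mean

def ai_human_gap(reviews):
    """reviews: iterable of (group, ai_score, human_score).

    Returns the mean of (AI score - human score) per group. A negative
    value means the AI scores that group lower than human reviewers do.
    """
    diffs = defaultdict(list)
    for group, ai_score, human_score in reviews:
        diffs[group].append(ai_score - human_score)
    return {group: mean(values) for group, values in diffs.items()}

# Hypothetical double-scored sample.
reviews = [
    ("dialect_a", 82, 80), ("dialect_a", 90, 92), ("dialect_a", 75, 75),
    ("dialect_b", 68, 80), ("dialect_b", 70, 78), ("dialect_b", 74, 84),
]
gaps = ai_human_gap(reviews)
needs_review = sorted(group for group, gap in gaps.items() if abs(gap) > 5)
print(gaps, needs_review)
```

Using the signed difference rather than the absolute one matters here: it shows not just that the AI and the reviewers disagree, but in which direction a given group is being pushed.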

Contact centers that adopt fairness in machine learning not only improve metrics but also foster trust. Let your automation reflect your values: ethical, inclusive, and transparent.

Start now by investing in platforms built for fairness like Convin.

Promote Fairness Ethics Across Your QA and Coaching Programs

Fairness in AI extends beyond audits; it influences how teams are trained, rewarded, and promoted. Bias in automated QA affects more than scores; it shapes careers and customer loyalty. Make ethics a cornerstone of your AI-powered contact center.

- Include fairness training in your learning and development (L&D) curriculum for managers and agents.

- Use AI tools that generate explainable coaching based on unbiased inputs.

- Promote inclusive QA policies that align with the company's overall values and ethics.

Convin allows you to operationalize fairness ethics from QA to coaching. Its suite of tools brings together automation and empathy in every agent interaction.

Schedule a demo with Convin today!

FAQs

- What is bias in Gen AI?

Bias in Gen AI refers to the unfair outcomes generated by AI models due to the use of skewed training data or flawed algorithms. In customer service, this may result in unequal treatment of agents or misinterpreted customer sentiment during AI-powered call audits.

- What can bias in AI algorithms in CRM lead to?

Bias in AI algorithms within CRM systems can lead to inaccurate customer profiling, misjudged lead scores, and unfair agent evaluations. This not only affects customer experience but also damages trust in automated CRM insights.

- Which CRM has the best AI?

CRMs like Salesforce, HubSpot, and Zoho lead in AI capabilities, offering predictive analytics, automation, and intelligent insights. However, platforms like Convin integrate specialized AI to enhance QA audits, agent coaching, and performance tracking with minimal bias.

- How has AI affected customer service?

AI has transformed customer service by automating responses, personalizing interactions, and optimizing support workflows. Yet, bias in AI remains a concern, especially in agent evaluations and QA, where fairness and accuracy are essential.
