Did you know a large language model (LLM) can analyze and summarize entire novels in seconds?

Imagine harnessing this power for your call center!

Here’s a challenge: Can your call center reduce average handle time by 20% while boosting customer satisfaction?

With a fine-tuned LLM, this isn’t just possible; it’s happening now. These AI systems are transforming knowledge management, chat suggestions, and feedback mechanisms in call centers.

This post will explore how large language models work, their applications, and their role as a type of generative AI model.

Stay with us to uncover the profound impact of LLMs on call center operations.

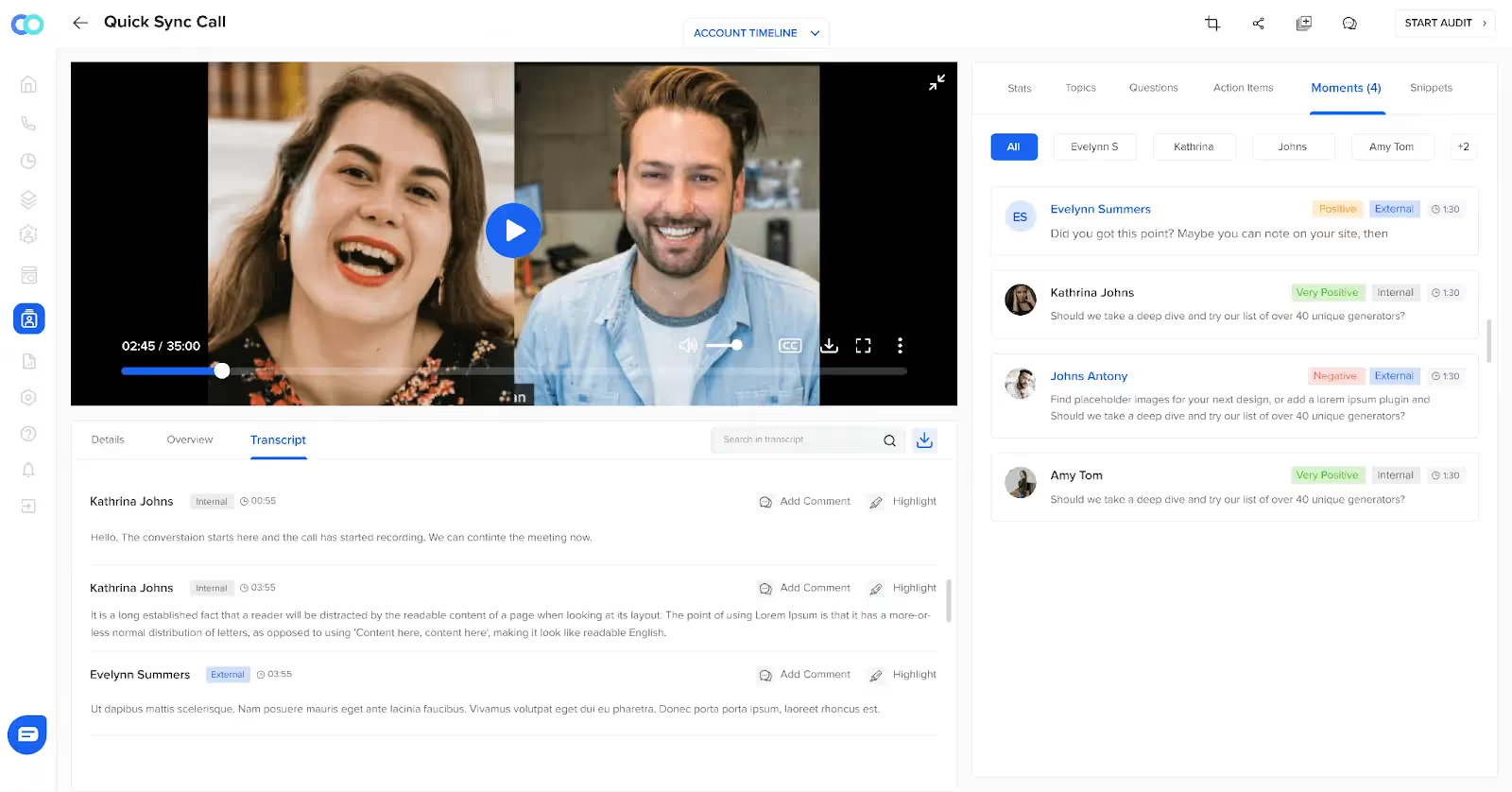

Analyze customer conversation data and extract insights with an advanced LLM.

Limitations of Bypassing LLM Fine-Tuning

Have you ever wondered what would happen if we skipped fine-tuning a large language model (LLM)?

Let’s break it down.

Bypassing fine-tuning might sound tempting to save time, but it comes with significant drawbacks:

Relevance and Accuracy

A generic large language model might produce responses that lack context-specific relevance. Imagine asking about a complex customer issue and getting a vague, unhelpful answer. Frustrating, right?

Performance Issues

Fine-tuning customizes the LLM to your specific data and use cases, significantly improving its performance. Without it, your LLM could underperform, giving generic responses rather than precise, valuable solutions.

User Experience

LLMs that haven't been fine-tuned may fail to understand your industry's unique language and terminology. This leads to a poor user experience, with agents and customers struggling through miscommunications and misunderstandings.

Efficiency Loss

Fine-tuning optimizes the LLM's capabilities for your particular needs so that it operates efficiently and effectively. Without it, you might face longer handling times and lower customer satisfaction rates.

Competitive Edge

Finally, skipping fine-tuning could mean losing a competitive advantage. A highly optimized and fine-tuned LLM can set your call center apart in today's fast-paced market.

So, while bypassing fine-tuning might seem like a shortcut, it’s clear that the benefits of a well-tuned LLM far outweigh the initial time investment.

What are your thoughts on this?

What are Large Language Models?

Large Language Models (LLMs) are a subset of artificial intelligence (AI) designed to understand, generate, and manipulate human language on a large scale. These foundation models are trained on massive datasets and can perform various tasks, including translation, summarization, question answering, and text generation.

Examples include GPT-3, BERT, and T5.

Fine-tuning uses machine learning to adapt pre-trained large language models to specific datasets, enhancing their ability to perform targeted tasks such as customer interaction and data analysis. This process helps LLMs deliver higher accuracy and relevance in real-world applications, improving call center efficiency and customer satisfaction.

How Are They Trained?

Pretraining LLMs involves two key stages: data collection and model training.

- Data Collection: LLMs are pre-trained on vast and diverse datasets from books, articles, websites, and other text-based resources. The datasets encompass multiple domains and contexts, ensuring the model learns a broad language understanding.

- Model Training: The training exposes the model to large volumes of text data, allowing it to learn language patterns, grammar, and context.

Two standard techniques are:

- Causal Language Modeling (CLM): The model predicts the next word in a sequence, learning to generate coherent text.

- Masked Language Modeling (MLM): The model predicts missing words in a sentence, improving its understanding of context and semantics.

During this phase, the model learns to recognize complex patterns in language, building a general knowledge base from the data it processes.
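To make the two objectives concrete, here is a minimal Python sketch of how training examples could be built from one tokenized sentence. It assumes simple whitespace tokenization and a flat masking rate; real pipelines use subword tokenizers, and BERT-style masking also replaces some selected tokens with random or unchanged ones instead of always using `[MASK]`. The function names are illustrative.

```python
import random
from typing import Dict, List, Tuple


def clm_pairs(tokens: List[str]) -> List[Tuple[List[str], str]]:
    """Causal LM: every prefix of the sequence is used to predict the token after it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def mlm_example(
    tokens: List[str], mask_rate: float = 0.15, mask_token: str = "[MASK]", seed: int = 0
) -> Tuple[List[str], Dict[int, str]]:
    """Masked LM: hide a random subset of tokens; the model must recover them from context."""
    rng = random.Random(seed)
    masked: List[str] = []
    targets: Dict[int, str] = {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_rate:
            masked.append(mask_token)
            targets[i] = tok  # position -> original token the model should predict
        else:
            masked.append(tok)
    return masked, targets


tokens = "please reset my account password".split()
print(clm_pairs(tokens)[:2])  # [(['please'], 'reset'), (['please', 'reset'], 'my')]
print(mlm_example(tokens, mask_rate=0.3))
```

The causal objective turns one sentence into several next-word predictions, while the masked objective lets the model see context on both sides of each hidden word.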

What is Fine-Tuning, and Why is it Necessary?

Fine-tuning a large language model (LLM) is like customizing a general-purpose tool for a specific job. It involves adapting the pre-trained LLM to better serve particular tasks or industries by training it on a targeted dataset.

This process significantly enhances the model's relevance and performance, making it highly effective for specific applications such as customer service in call centers.

Here’s why fine-tuning is essential:

- Relevance: Tailors the LLM to understand and generate domain-specific language, improving accuracy and context-specific responses.

- Efficiency: Optimizes the LLM’s operations for particular tasks, leading to faster and more efficient query handling.

- User Experience: Enhances the overall user experience by providing more precise and relevant information.

- Competitive Advantage: Provides a significant edge over competitors by leveraging a highly specialized AI system.

How Are They Fine-Tuned?

Fine-tuning adapts a pre-trained LLM to specific tasks or domains.

It involves several steps:

- Task-Specific Dataset: A smaller, more focused dataset relevant to the specific task is collected. This dataset is often labeled with the correct answers or classifications for the task, such as customer service interactions, medical records, or financial documents.

- Training Process: The pre-trained model is further trained on this specialized dataset, adjusting its parameters to better suit the specific task. Techniques used include:

  - Supervised Fine-Tuning: The model learns to predict labels or outputs based on the provided training data.

  - Parameter Adjustment: The model's weights and biases are fine-tuned to optimize the language model’s performance for the task, ensuring it leverages its general knowledge in a task-specific manner.

- Evaluation and Iteration: The fine-tuned model is evaluated on a validation set to assess its performance. Metrics such as accuracy, precision, recall, and F1 score are used to gauge effectiveness. Based on the evaluation, further adjustments may be made in an iterative process to refine the language model's performance.

- Deployment: Once fine-tuned, the model can perform the specific task accurately and efficiently. This adaptation makes the LLM highly effective in real-world applications where nuanced understanding and context-specific responses are crucial.
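The evaluation step can be illustrated for a classification-style task such as intent detection. This is a minimal, dependency-free sketch; the intent labels are hypothetical, and generative outputs such as free-text replies may need other measures (for example, human review) instead of these metrics.

```python
from typing import Sequence, Tuple


def precision_recall_f1(y_true: Sequence[str], y_pred: Sequence[str], label: str) -> Tuple[float, float, float]:
    """One-vs-rest precision, recall, and F1 for a single label."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == label and p == label)
    fp = sum(1 for t, p in pairs if t != label and p == label)
    fn = sum(1 for t, p in pairs if t == label and p != label)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def evaluate(y_true: Sequence[str], y_pred: Sequence[str]) -> dict:
    """Accuracy plus macro-averaged F1 (each label weighted equally)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and the same length")
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    labels = sorted(set(y_true) | set(y_pred))
    per_label = {lab: precision_recall_f1(y_true, y_pred, lab) for lab in labels}
    macro_f1 = sum(f1 for _, _, f1 in per_label.values()) / len(labels)
    return {"accuracy": accuracy, "macro_f1": macro_f1, "per_label": per_label}


# Hypothetical intent labels for a small validation set.
gold = ["billing", "billing", "refund", "tech", "refund"]
pred = ["billing", "refund", "refund", "tech", "billing"]
report = evaluate(gold, pred)
print(report["accuracy"])  # 0.6
```

Macro-averaging keeps rare intents from being hidden by frequent ones, which matters when a few call types dominate the data.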

How Does Fine-Tuning an LLM Work?

Fine-tuning an LLM involves several detailed steps:

- Data Collection: The process begins with gathering substantial domain-specific data. This includes customer interaction logs, emails, chat transcripts, product information, and other relevant content.

The goal is to create a dataset that reflects the specific industry's language, terminology, and unique challenges.

- Training: The collected data is then used to train the LLM. During this phase, the model learns to recognize the domain's patterns, terminologies, and contextual nuances.

This training adjusts the model's weights and parameters, making it more adept at handling specialized queries.

- Evaluation and Adjustment: After training, the model's performance is evaluated using a set of criteria relevant to the specific application. These include accuracy, relevance, and response time. If the model’s performance is not satisfactory, further adjustments are made.

This iterative process ensures that the LLM meets the desired performance standards.

- Real-World Application: The LLM is deployed in real-world scenarios once fine-tuning is complete.

For example, in a call center, the fine-tuned LLM can provide real-time chat suggestions, summarize customer interactions, and generate insightful feedback, all tailored to the industry's specific needs.
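Turning raw transcripts into training data is usually the most hands-on part of the process. The sketch below assumes transcripts arrive as lists of (speaker, text) turns labeled "customer" or "agent", masks obvious emails and phone numbers with deliberately crude regular expressions, and writes chat-style JSONL records of the form {"messages": [...]}, a layout several hosted fine-tuning services accept; check your provider's documentation for its exact schema. Every name here is illustrative.

```python
import json
import random
import re
from typing import Dict, List, Tuple

# Deliberately crude PII patterns; real redaction needs a dedicated, audited tool.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s-]{7,}\d")


def clean(text: str) -> str:
    """Mask emails and phone-like digit runs, then collapse whitespace."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return " ".join(text.split())


def transcript_to_examples(turns: List[Tuple[str, str]], system_prompt: str) -> List[Dict]:
    """Each agent turn becomes a target reply, conditioned on everything said before it."""
    examples = []
    history = [{"role": "system", "content": system_prompt}]
    for speaker, text in turns:
        role = "assistant" if speaker == "agent" else "user"
        message = {"role": role, "content": clean(text)}
        # Skip an agent turn with no preceding conversation (e.g. an opening greeting).
        if role == "assistant" and len(history) > 1:
            examples.append({"messages": history + [message]})
        history = history + [message]
    return examples


def split_train_val(examples: List[Dict], val_fraction: float = 0.1, seed: int = 42):
    """Shuffle deterministically and hold out a validation slice (at least one example)."""
    shuffled = examples[:]
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, int(len(shuffled) * val_fraction)) if len(shuffled) > 1 else 0
    return shuffled[n_val:], shuffled[:n_val]


def write_jsonl(path: str, examples: List[Dict]) -> None:
    """Write one JSON object per line."""
    with open(path, "w", encoding="utf-8") as f:
        for example in examples:
            f.write(json.dumps(example, ensure_ascii=False) + "\n")


turns = [
    ("customer", "Hi, I was double charged. Email me at jane@example.com"),
    ("agent", "Sorry about that! I can refund the duplicate charge."),
    ("customer", "Thanks, my number is 555-123-4567"),
    ("agent", "Noted. The refund has been issued."),
]
examples = transcript_to_examples(turns, "You are a helpful support agent.")
train, val = split_train_val(examples)
# write_jsonl("train.jsonl", train); write_jsonl("val.jsonl", val)
```

Because consecutive examples from one call share most of their history, a real pipeline would split by transcript rather than by example so the validation set stays independent of the training set.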

Have you seen the difference a well-tuned LLM can make in your operations? What challenges could be addressed with better fine-tuning?

Integrate fine-tuned LLMs with Convin to enable real-time data processing.

This blog is just the start.

Unlock the power of Convin’s AI with a live demo.

Large Language Models and Fine-Tuning Methods

Advanced large language models (LLMs) like GPT-3, GPT-4, and BERT are transforming how businesses handle customer data. These foundation models can be fine-tuned for specific applications, significantly improving various call center functions.

Neural networks are the backbone of large language models (LLMs), enabling them to learn and recognize complex language patterns through layered structures. Fine-tuning these neural networks with specific datasets allows LLMs to excel at tasks that enhance call center operations and customer interactions.

Here’s an in-depth look at how this process works and its applications in knowledge bases, summarization, feedback, and chat suggestions.

1. Knowledge Base Enhancement

Fine-tuned LLMs improve knowledge bases by efficiently organizing and retrieving accurate information tailored to specific queries. This customization ensures that agents can quickly find precise answers, improving response times and accuracy.

- Custom Data Training: LLMs learn the nuances and terminology unique to the industry by training on specific datasets related to a company's products and services.

- Efficient Information Retrieval: Fine-tuned LLMs can swiftly search through vast knowledge bases to provide accurate information to agents, reducing the time spent looking for answers.

- Dynamic Updates: The models can be continuously updated with new information, ensuring the knowledge base remains current and relevant.

With Convin's integration, LLMs are fine-tuned using your specific call center data, ensuring the AI understands your unique products and services. This means agents can quickly access accurate and relevant information, drastically reducing response times and improving service quality.
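The retrieval side can be sketched without any model at all. Many production systems pair a fine-tuned LLM with embedding-based search over the knowledge base; to stay dependency-free, this sketch swaps in classic TF-IDF cosine similarity instead. The article titles, text, and the `KeywordRetriever` class are hypothetical.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


class KeywordRetriever:
    """Ranks knowledge-base articles by TF-IDF cosine similarity to a query."""

    def __init__(self, articles: Dict[str, str]):
        self.titles = list(articles)
        docs = [Counter(tokenize(body)) for body in articles.values()]
        n = len(docs)
        df = Counter(term for doc in docs for term in doc)
        # Smoothed IDF: terms found in every article still get a small positive weight.
        self.idf = {t: math.log((1 + n) / (1 + c)) + 1 for t, c in df.items()}
        self.vectors = [self._weigh(doc) for doc in docs]

    def _weigh(self, counts: Counter) -> Dict[str, float]:
        # Query terms never seen in the knowledge base get weight 0.
        return {t: c * self.idf.get(t, 0.0) for t, c in counts.items()}

    @staticmethod
    def _cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
        dot = sum(w * b.get(t, 0.0) for t, w in a.items())
        na = math.sqrt(sum(w * w for w in a.values()))
        nb = math.sqrt(sum(w * w for w in b.values()))
        return dot / (na * nb) if na and nb else 0.0

    def search(self, query: str, k: int = 3) -> List[Tuple[str, float]]:
        q = self._weigh(Counter(tokenize(query)))
        scored = [(self._cosine(q, v), title) for title, v in zip(self.titles, self.vectors)]
        scored.sort(reverse=True)
        return [(title, score) for score, title in scored[:k] if score > 0]


kb = {
    "Refund policy": "Refunds are issued to the original payment method within 5 business days.",
    "Password reset": "To reset a password, open Settings and choose Reset password.",
    "Shipping times": "Standard shipping takes 3 to 7 business days.",
}
retriever = KeywordRetriever(kb)
print(retriever.search("how do I reset my password"))
```

Keyword matching misses paraphrases (note that "refund" does not match "Refunds" here), which is one reason embedding search is common in practice.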

2. Summarization

LLMs trained on specific datasets can condense lengthy customer interactions into concise summaries. This saves time for agents and managers, provides clear, actionable insights, and improves overall efficiency.

- Data Processing: LLMs process large volumes of text data, extracting key points and discarding irrelevant information.

- Context Understanding: The models understand the context of conversations, allowing them to generate summaries that capture the essence of interactions.

- Actionable Insights: Summarized data helps managers quickly understand customer issues and agent performance, enabling timely decision-making.

Convin leverages LLMs to summarize long customer interactions into concise, actionable insights. This feature saves your agents and managers valuable time, allowing them to focus on resolving issues rather than sifting through lengthy call logs.
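A summarization call can be wrapped so its output is predictable enough for dashboards. In this sketch, `generate` is a hypothetical stand-in for whatever client sends a prompt to your fine-tuned model and returns its text; the prompt wording and the `issue` / `resolution` / `next_steps` fields are assumptions, not a Convin or vendor API.

```python
import json
from typing import Callable, Dict, List, Tuple

SUMMARY_KEYS = ("issue", "resolution", "next_steps")


def build_summary_prompt(turns: List[Tuple[str, str]]) -> str:
    """Render the transcript and ask for a fixed JSON shape."""
    transcript = "\n".join(f"{speaker}: {text}" for speaker, text in turns)
    return (
        "Summarize this call center conversation. Reply with JSON containing "
        'the keys "issue", "resolution", and "next_steps".\n\n' + transcript
    )


def summarize_call(turns: List[Tuple[str, str]], generate: Callable[[str], str]) -> Dict[str, str]:
    """Call the model via `generate` and normalize its reply into the expected fields."""
    raw = generate(build_summary_prompt(turns))
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        data = {}
    if not isinstance(data, dict):
        data = {}
    # Missing or malformed fields become empty strings so downstream code never breaks.
    return {key: str(data.get(key, "")) for key in SUMMARY_KEYS}


def fake_generate(prompt: str) -> str:
    # Stand-in for a real model client, used only to show the flow.
    return '{"issue": "Double charge", "resolution": "Refund issued", "next_steps": "Confirm refund in 5 days"}'


call = [("customer", "I was charged twice this month."), ("agent", "I've refunded the duplicate charge.")]
print(summarize_call(call, fake_generate)["issue"])  # Double charge
```

Injecting `generate` as a parameter keeps the parsing logic testable without calling a live model.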

3. Feedback Mechanisms

LLMs analyze customer feedback by identifying patterns and sentiments specific to the business. This real-time feedback analysis helps quickly address customer concerns, leading to higher satisfaction rates.

- Sentiment Analysis: Fine-tuned LLMs can detect positive, negative, and neutral sentiments from customer interactions.

- Pattern Recognition: The models identify recurring themes and issues, providing insights into customer pain points.

- Automated Reports: LLMs can generate detailed feedback reports highlighting areas of improvement and successful interactions.

Fine-tuned LLMs with Convin can identify sentiment and provide detailed feedback reports by analyzing customer interactions. This helps you understand customer satisfaction and areas needing improvement.
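Once a model has labeled each call, the reporting step is ordinary aggregation. The sketch assumes each call has already been tagged by the fine-tuned model with a sentiment (`positive`, `negative`, or `neutral`) and a list of topics; the `CallAnalysis` record and the report fields are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import List


@dataclass
class CallAnalysis:
    call_id: str
    sentiment: str  # "positive", "negative", or "neutral", as labeled by the model
    topics: List[str] = field(default_factory=list)


def feedback_report(calls: List[CallAnalysis], top_n: int = 3) -> dict:
    """Summarize the sentiment mix and the most frequent topics in negative calls."""
    total = len(calls)
    sentiments = Counter(c.sentiment for c in calls)
    negative_topics = Counter(t for c in calls if c.sentiment == "negative" for t in c.topics)
    return {
        "total_calls": total,
        "sentiment_share": {s: round(n / total, 2) for s, n in sentiments.items()} if total else {},
        "top_negative_topics": negative_topics.most_common(top_n),
    }


calls = [
    CallAnalysis("c1", "negative", ["billing", "wait time"]),
    CallAnalysis("c2", "positive", ["billing"]),
    CallAnalysis("c3", "negative", ["billing"]),
    CallAnalysis("c4", "neutral"),
]
result = feedback_report(calls)
print(result["top_negative_topics"])  # [('billing', 2), ('wait time', 1)]
```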

4. Chat Suggestions

By training on conversational data, LLMs can offer contextually relevant chat suggestions and responses in real-time. This feature aids agents in maintaining high-quality interactions and ensuring consistent service standards.

- Real-Time Assistance: LLMs provide agents with instant suggestions and responses based on the ongoing conversation.

- Contextual Relevance: The models understand the context and intent behind customer queries, offering appropriate and helpful responses.

- Improved Efficiency: Real-time chat suggestions help agents resolve issues faster, improving overall efficiency and customer satisfaction.

Convin’s real-time chat suggestions, powered by fine-tuned LLMs, enhance agent-customer interactions. The AI provides contextually relevant responses and suggestions, ensuring that your agents always have the right information at the right time. These capabilities rest on natural language processing (NLP), the field of AI that enables LLMs to understand and generate human-like text.
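Real-time suggestions need a prompt that stays small as the conversation grows. One common approach, sketched here under assumptions, is to keep only the most recent turns that fit a budget; word count stands in for a real tokenizer's token count, and the prompt text and function names are illustrative.

```python
from typing import List, Tuple

Turn = Tuple[str, str]  # (speaker, text)


def recent_context(turns: List[Turn], max_words: int = 200) -> List[Turn]:
    """Keep the newest turns whose combined word count fits within max_words."""
    kept: List[Turn] = []
    used = 0
    for speaker, text in reversed(turns):
        n_words = len(text.split())
        if used + n_words > max_words:
            break  # older turns are dropped once the budget is exhausted
        kept.append((speaker, text))
        used += n_words
    return list(reversed(kept))


def suggestion_prompt(turns: List[Turn], max_words: int = 200) -> str:
    """Build a prompt asking the model for the agent's next reply."""
    lines = "\n".join(f"{s}: {t}" for s, t in recent_context(turns, max_words))
    return "Suggest the agent's next reply in this live conversation.\n\n" + lines + "\nagent:"


live = [
    ("customer", "my order never arrived"),
    ("agent", "sorry to hear that"),
    ("customer", "it was supposed to come Monday"),
]
print(suggestion_prompt(live, max_words=10))
```

Dropping the oldest turns keeps latency and cost bounded; if earlier context matters, a short summary of it could be prepended instead.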

Which Pre-Trained Model is Most Suitable for Fine-Tuning?

Choosing a suitable pre-trained large language model (LLM) for fine-tuning is crucial for optimizing performance.

Let’s explore this,

- GPT-3 and GPT-4: These OpenAI models are among the most popular due to their versatility and extensive capabilities. They excel at generating human-like text and can be fine-tuned for specific applications such as customer service, content creation, and more.

- BERT and its Variants (RoBERTa, DistilBERT): BERT, developed by Google, is particularly adept at understanding the context of a given text. Its variants offer different balances between performance and computational efficiency, making them ideal for tasks like text classification and sentiment analysis in call centers.

- T5 (Text-to-Text Transfer Transformer): Google’s T5 is versatile and can be fine-tuned for various tasks by casting each one into a text-to-text format. It’s well suited to summarization and translation.

- FLAN (Finetuned Language Net): Google's FLAN models, such as FLAN-T5, are instruction-tuned to handle new tasks with few or no examples, making them suitable for applications where annotated data is scarce.
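T5's text-to-text framing means every task, classification included, is expressed as an input string and a target string. The sketch below builds such pairs; `summarize:` mirrors a prefix used in the original T5 work, while the classification prefixes are hypothetical ones you would choose for your own call center tasks.

```python
from typing import Dict, Tuple

# "summarize: " follows the original T5 convention; the other prefixes are hypothetical.
TASK_PREFIXES: Dict[str, str] = {
    "summary": "summarize: ",
    "intent": "classify intent: ",
    "sentiment": "classify sentiment: ",
}


def to_text_to_text(task: str, text: str, target: str) -> Tuple[str, str]:
    """Express any supported task as an (input text, target text) training pair."""
    if task not in TASK_PREFIXES:
        raise ValueError(f"unknown task: {task}")
    return TASK_PREFIXES[task] + text, target


pairs = [
    to_text_to_text("intent", "I want my money back", "refund"),
    to_text_to_text("sentiment", "Thanks, that fixed it!", "positive"),
]
print(pairs[0])  # ('classify intent: I want my money back', 'refund')
```

Because the label itself is text, one model and one training loop can serve summarization, intent, and sentiment tasks at once.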

Choosing the Right Model

The choice depends on the specific needs of your application:

- For Generating Responses: GPT-3 and GPT-4 are highly effective due to their ability to generate coherent, contextually relevant text.

- For Understanding Context: BERT and its variants are great for tasks requiring deep contextual understanding.

- For Versatile Text Tasks: T5’s flexibility in handling various text-to-text tasks can be very advantageous.

- For Limited Data Scenarios: FLAN models are a strong option when training data is limited.

Selecting the right pre-trained model for fine-tuning is crucial for optimizing LLM applications. Choose the model that aligns with your needs to enhance your call center's performance.

How Does an Advanced LLM Analyze Customer Data?

An advanced LLM analyzes customer data by processing and interpreting large volumes of interactions across various channels, identifying patterns, sentiments, and compliance issues. It uses natural language processing and machine learning algorithms to provide actionable insights and improve customer service quality.

Here's how teams that invest in LLMs to analyze call center conversations typically approach the process:

- Identify Goal: Determine the specific use case or goal, such as improving customer satisfaction or reducing call handling time.

- Data Collection and Preprocessing: Gather and clean data from various sources, removing noise and irrelevant content. Tokenize the text to help the model process it effectively.

- Pre-training: Start from a model pre-trained on large datasets, which has already developed a deep understanding of language patterns.

- Fine-tuning: Adjust the model's parameters using a task-specific labeled dataset, improving its accuracy and relevance to the task.

- Training Strategy: Use efficient training methods, such as Low-Rank Adaptation (LoRA), to manage computational complexity and cost.

- Supervised Fine-Tuning: Enhance the model's performance on tasks such as question answering, sentiment analysis, and summarization.
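LoRA keeps the pre-trained weight matrix W (d × k) frozen and learns a low-rank update: the layer computes W x + (α / r) · B A x, where B is d × r, A is r × k, and r is much smaller than d and k. The pure-Python sketch below shows both the parameter savings and the forward pass; it is illustrative only, since real fine-tuning runs on a deep learning framework (libraries such as Hugging Face PEFT implement LoRA for real models). The rank, α, and initialization scale are example values.

```python
import random
from typing import List, Tuple

Matrix = List[List[float]]


def matvec(m: Matrix, v: List[float]) -> List[float]:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def lora_param_counts(d: int, k: int, r: int) -> Tuple[int, int]:
    """Trainable parameters for one d x k weight: full fine-tuning vs. a rank-r adapter."""
    return d * k, r * (d + k)


class LoRALinear:
    """y = W x + (alpha / r) * B (A x), with W frozen and only A and B trainable."""

    def __init__(self, W: Matrix, r: int, alpha: float = 16.0, seed: int = 0):
        d, k = len(W), len(W[0])
        rng = random.Random(seed)
        self.W = W  # frozen pre-trained weights, d x k
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(k)] for _ in range(r)]  # r x k, small random init
        self.B = [[0.0] * r for _ in range(d)]  # d x r, zero init so training starts from W exactly
        self.scale = alpha / r

    def forward(self, x: List[float]) -> List[float]:
        base = matvec(self.W, x)
        delta = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * u for b, u in zip(base, delta)]


full, lora = lora_param_counts(4096, 4096, 8)
print(full, lora)  # 16777216 65536
layer = LoRALinear([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]], r=1)
print(layer.forward([1.0, 1.0]))  # [3.0, 7.0, 11.0]
```

With d = k = 4096 and r = 8, one layer drops from 16,777,216 trainable parameters to 65,536, about 0.4%. Because B starts at zero, the adapted layer initially behaves exactly like the original one.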

Integrating LLMs into call centers can improve efficiency, customer satisfaction, and performance, and these benefits generally outweigh the challenges. In industries such as BFSI (banking, financial services, and insurance), building technical expertise in generative AI is crucial for future-proofing and staying competitive.

Customize your LLM with the datasets you need for optimized performance.

Staying Ahead with AI-Powered LLM Technology

Integrating fine-tuned large language models (LLMs) with Convin can revolutionize your call center operations. These advanced AI models boost efficiency and elevate customer satisfaction, making your call center a productivity powerhouse.

Scale your call center with the power of AI, and watch how these modern tools enhance business processes. Get started with a demo today to see how Convin’s integration with fine-tuned LLMs can improve your operations and customer satisfaction.

Frequently Asked Questions

1. What is LLM in generative AI?

An LLM (Large Language Model) in generative AI is a deep learning model, trained on large amounts of text, that understands and produces human-like language.

2. How does LLM fine-tuning work?

Fine-tuning involves training a pre-trained LLM on a specific dataset to adapt responses to particular tasks or domains.

3. When should you fine-tune LLMs?

Fine-tune LLMs when you need them to perform specific tasks or understand domain-specific language and nuances.

4. What is domain-specific LLM fine-tuning?

Domain-specific fine-tuning customizes an LLM to understand and generate text relevant to a particular field or industry.

5. What are the metrics for LLM fine-tuning evaluation?

Depending on the specific task, common metrics include accuracy, precision, recall, F1 score, and loss.

6. How does model fine-tuning work?

Model fine-tuning adjusts the weights of a pre-trained model using a smaller, task-specific dataset to improve performance on particular tasks.

7. What are the problems with fine-tuning LLM?

Issues include overfitting, high computational costs, and the need for large, high-quality datasets.

8. How much data is needed for LLM fine-tuning?

The amount varies with the task, model, and method. Narrow tasks can show gains with far fewer examples, while broader domain adaptation may need tens of thousands.
